A multidisciplinary team of UNC-Chapel Hill researchers has received a grant to study how to monitor post-treatment cancer patients and provide them with feedback, using a combination of radio and audio sensing technologies.

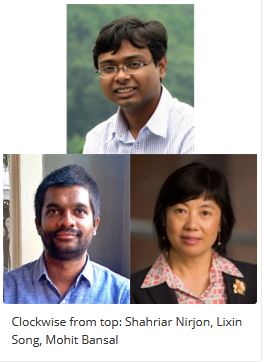

Led by assistant professor Shahriar Nirjon (computer science), the team was awarded a National Institutes of Health (NIH) R01 grant worth $941,000 over four years.

The project, dubbed “AURA” (a combination of AUdio and RAdio), is a collaboration among Nirjon and Mohit Bansal of the Department of Computer Science and Lixin Song of the School of Nursing.

Existing audio-based voice assistant devices, such as Amazon Echo and Google Home, will be augmented with radio-frequency (RF) sensing, enabling the devices to capture more information about their surroundings and provide contextually relevant feedback to the patient. By combining audio and radio sensing, the device will gather relevant patient information both automatically and interactively, and store patients’ health records in an electronic system shared by the entire care team, including the patient, family members, caregivers and healthcare providers at remote sites. The project will evaluate AURA’s performance when deployed in the homes of colorectal and bladder cancer patients after surgery, but AURA’s design is generic and can be extended to a wide range of post-treatment self-care scenarios.

The grant was awarded through the National Science Foundation Smart and Connected Health (NSF SCH) program, a highly competitive cross-agency program. The program supports the development of technologies, analytics and models for next-generation health and medical research through high-risk, high-reward advances in computer and information science, engineering and technology, and behavior and cognition. Over the four-year project, Nirjon will lead research on radio-frequency-based human activity recognition, Bansal will lead research on natural language feedback and Song will lead the study of post-treatment colorectal and bladder cancer patients using the developed technology.